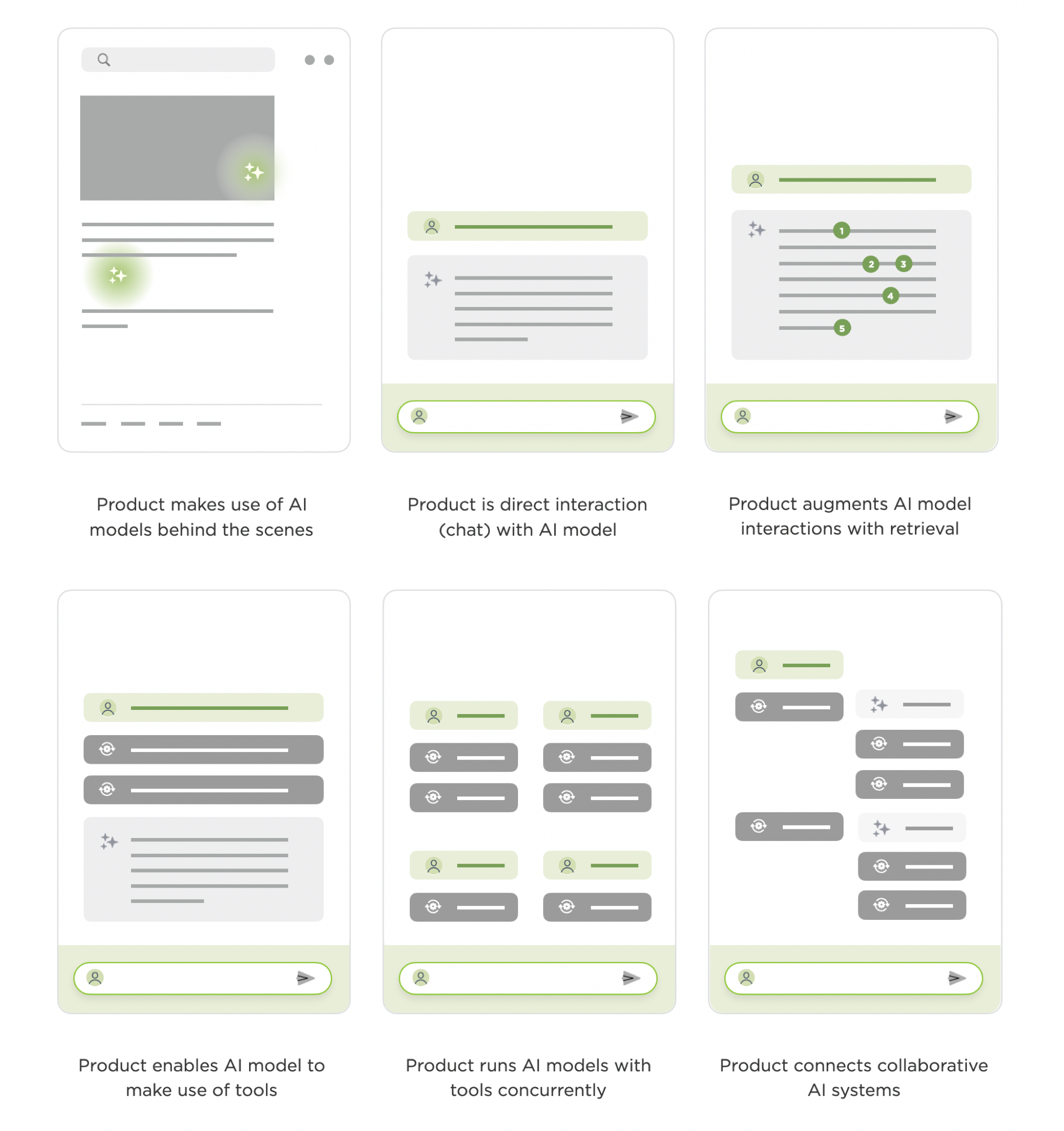

At this point, the use of artificial intelligence and machine learning models in software has a long history. But the past three years have really accelerated the evolution of "AI products". From behind-the-scenes models to chat to agents, here's how I've seen things evolve for the AI-first companies we've built during this period.

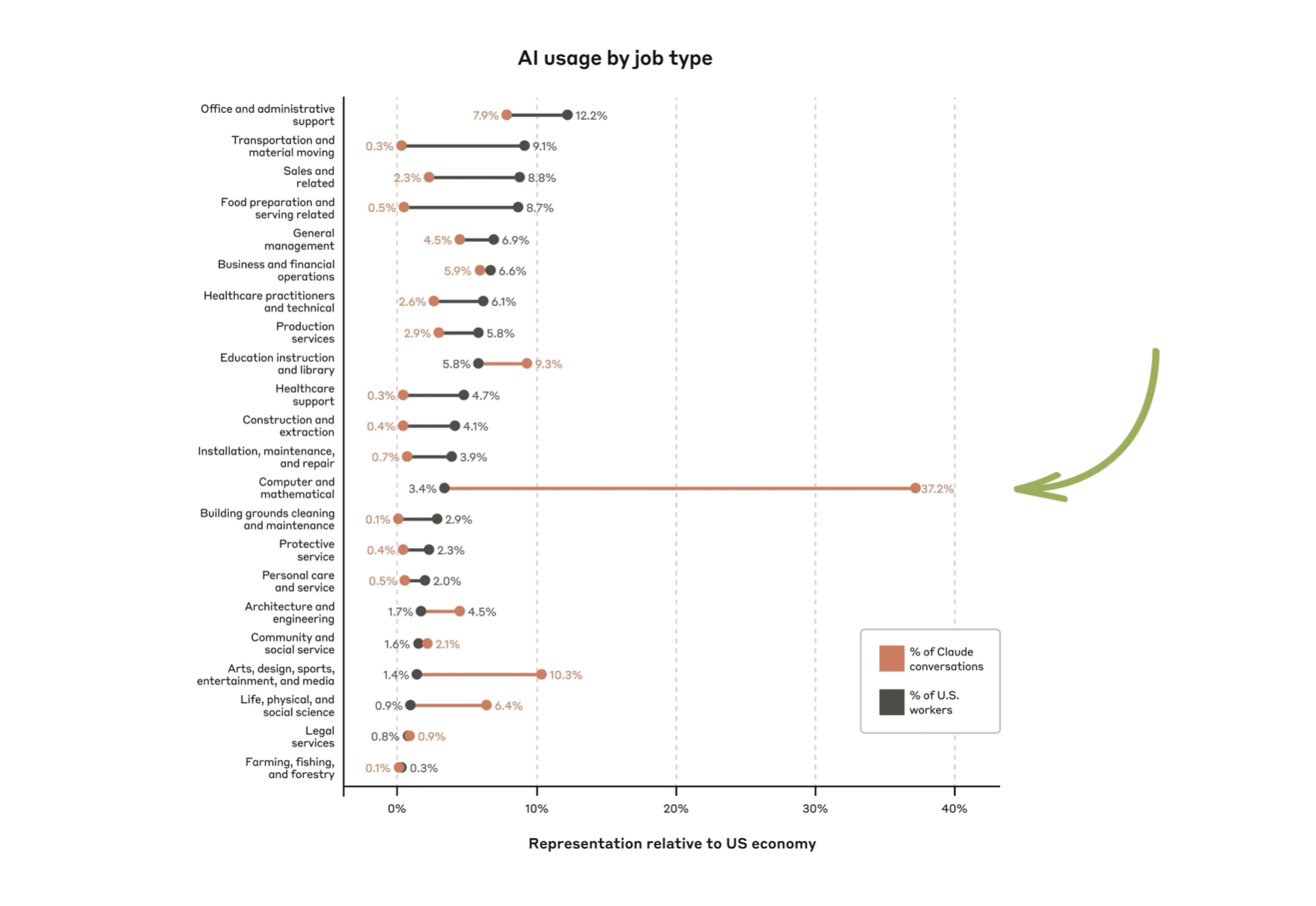

Anthropic, one of the world's leading AI labs, recently released data on what kinds of jobs make the most use of their foundation model, Claude. Computer and math use outpaced other jobs by a very wide margin, which matches up with AI adoption by software engineers. To date, they've been the most open to not only trying AI but applying it to their daily tasks.

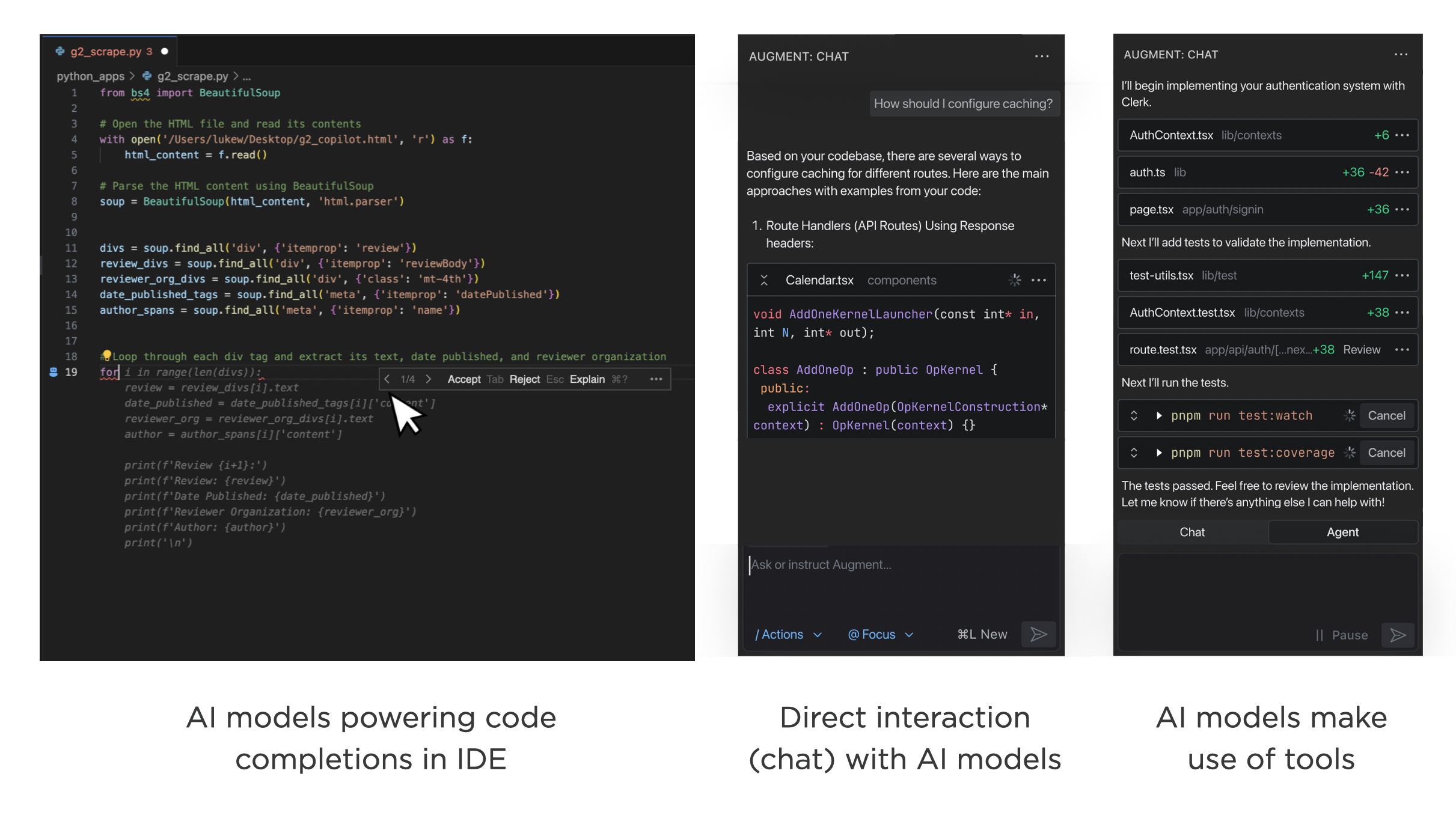

As such, the evolution of AI products is currently clearest in AI-for-coding companies like Augment. When Augment started over two years ago, they used AI models to power code completions in existing developer tools. A short time later, they launched a chat interface where developers could interact directly with AI models. Last month, they launched Augment Agent, which pairs AI models with tools to get more things done. Their transition isn't an isolated example.

Machine Learning Behind the Scenes

Before everyone was creating chat interfaces and agents, large-scale machine learning systems were powering software interfaces behind the scenes. Back in 2016, Google Translate announced the use of deep learning to enable better translations across more languages. YouTube's video recommendations also improved dramatically that same year thanks to deep learning techniques.

Although machine learning and AI models were responsible for key parts of these products' overall experience, they remained in the background: they provided critical functionality, but people never interacted with them directly.

Chat Interfaces to AI Models

The practice of directly interacting with AI models was mostly limited to research efforts until the launch of ChatGPT. All of a sudden, millions of people were directly interacting with an AI model and the information found in its weights (think of a fuzzy database that accesses its information through complex prediction instead of simple look-up techniques).

ChatGPT was exactly that: one could chat with the GPT model trained by OpenAI. This brought AI models from the background of products to the foreground and led to an explosion of chat interfaces to text, image, video, and 3D models of various sizes.

Retrieval Augmented Products

Pretty quickly, companies realized that AI models provided much better results if they were given more context. At first, this meant people writing prompts (or instructions for AI models) with more explicit intent and often increasing length. To scale this approach beyond prompting, retrieval-augmented generation (RAG) products began to emerge.

My personal AI system, Ask LukeW, makes extensive use of indexing, retrieval, and re-ranking systems to create a product that serves as a natural language interface to my nearly 30 years of writings and talks. ChatGPT has also become a retrieval-augmented product, as it regularly makes use of Web search instead of just its weights when it responds to user instructions.
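To make the retrieval idea concrete, here is a minimal sketch of the index, retrieve, and prompt-building steps. It is not how Ask LukeW or ChatGPT are actually built: the tiny `DOCS` corpus, the function names, and the word-count similarity are all assumptions made up for illustration, and the re-ranking step is left out. Real systems typically use learned embeddings and a vector index instead of raw word counts.

```python
import math
import re
from collections import Counter

# Hypothetical mini-corpus standing in for an archive of articles.
DOCS = {
    "forms": "Web forms work best with clear labels and inline validation.",
    "mobile": "Mobile first design starts with the smallest screen and core tasks.",
    "agents": "AI agents pair models with tools to plan and complete tasks.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Index step: store one word-count vector per document."""
    return {doc_id: Counter(tokenize(text)) for doc_id, text in docs.items()}


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, index, k=2):
    """Retrieval step: return up to k document ids that share words with the query."""
    q = Counter(tokenize(query))
    scored = sorted(((cosine(q, vec), doc_id) for doc_id, vec in index.items()), reverse=True)
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def build_prompt(query, doc_ids, docs):
    """Augmentation step: place the retrieved text ahead of the user's question."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in doc_ids)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    index = build_index(DOCS)
    question = "How do AI agents use tools?"
    print(build_prompt(question, retrieve(question, index), DOCS))
```

The resulting prompt is what would be sent to a model, so the model answers from the retrieved text instead of relying only on its weights.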

Tool Use & Foreground Agents

Though it can significantly improve AI products, information retrieval is only one tool that AI systems can now (with a few of the most recent foundation models) make use of. When AI models have access to a number of different tools and can plan which ones to use and how, things become agentic.

For instance, our AI-powered workspace, Bench, has many tools it can use to retrieve information but also tools to fact-check data, do data analysis, generate PowerPoint decks, create images, and much more. In this type of product experience, people give AI models instructions. Then the models make plans, pick tools, configure them, and make use of the results to move on to the next step or not. People can steer or refine this process with user interface controls or, more commonly, further instructions.

Bench allows people to interrupt agentic processes with natural language, configure tool parameters and rerun them, select which models to use with different tools, and much more. But in the vast majority of cases, the system evaluates its options and makes these decisions itself to give people the best possible outcome.
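The plan, pick a tool, use the result loop described above can be sketched in a few lines. This is an illustrative toy, not Bench's implementation: the two tools, `stub_planner`, and `run_agent` are hypothetical names, and the planner is a keyword check standing in for a model that would decide the next step.

```python
def word_count(text):
    """Toy tool: count the words in the text."""
    return len(text.split())


def shout(text):
    """Toy tool: upper-case the text."""
    return text.upper()


# Hypothetical tool registry the agent can choose from.
TOOLS = {"word_count": word_count, "shout": shout}


def stub_planner(instruction, history):
    """Stand-in for a model: pick the next tool name, or None when finished."""
    used = {step["tool"] for step in history}
    wanted = instruction.lower()
    if "count" in wanted and "word_count" not in used:
        return "word_count"
    if "shout" in wanted and "shout" not in used:
        return "shout"
    return None


def run_agent(instruction, text, planner=stub_planner, max_steps=5):
    """Loop: ask the planner for a tool, run it, record the result, repeat."""
    history = []
    for _ in range(max_steps):  # cap the steps so the loop always ends
        tool_name = planner(instruction, history)
        if tool_name is None:
            break
        result = TOOLS[tool_name](text)
        history.append({"tool": tool_name, "result": result})
    return history


if __name__ == "__main__":
    for step in run_agent("Count the words, then shout them", "hello agent world"):
        print(step)
```

Because the planner sees the history on every turn, a person could steer the process mid-run by changing the instruction or editing the history, which is roughly what "further instructions" amounts to in this sketch.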

Background Agents

When people first begin using agentic AI products, they tend to monitor and steer the system to make sure it's doing the things they asked for correctly. After a while though, confidence sets in and the work of monitoring AI models as they execute multi-step processes becomes a chore. You quickly get to wanting multiple processes to run in the background and only bother you when they are done or need help. Enter... background agents.

AI products that make use of background agents allow people to run multiple processes in parallel, across devices, and even schedule them to run at specific times or with particular triggers. In these products, the interface needs to support monitoring and managing lots of agentic workflows concurrently instead of guiding one at a time.
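As a rough sketch of what "run in the background and only bother you when done or in need of help" might look like in code, the example below runs several simulated agents concurrently with Python's `asyncio` and collects one status per agent. The `background_agent` function, its `needs_help_at` parameter, and the job list are hypothetical; a real agent would call models and tools where this one simply yields control.

```python
import asyncio


async def background_agent(name, steps, needs_help_at=None):
    """Simulated agent: works through its steps, pausing if it hits a step it can't handle."""
    for step in range(1, steps + 1):
        await asyncio.sleep(0)  # yield so the other agents can make progress
        if step == needs_help_at:
            return {"agent": name, "status": "needs_help", "step": step}
    return {"agent": name, "status": "done", "step": steps}


async def run_all(jobs):
    """Start every job as its own task and wait for all of them to report back."""
    tasks = [asyncio.create_task(background_agent(**job)) for job in jobs]
    return await asyncio.gather(*tasks)  # results come back in job order


def summarize(results):
    """What a monitoring view might show: one status per agent."""
    return {result["agent"]: result["status"] for result in results}


if __name__ == "__main__":
    jobs = [
        {"name": "research", "steps": 3},
        {"name": "slides", "steps": 4, "needs_help_at": 2},
    ]
    print(summarize(asyncio.run(run_all(jobs))))
```

The person only sees the summary: which agents finished and which are waiting for input, rather than every intermediate step.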

Agent to Agent

So what's next? Once AI products can run multiple tasks themselves remotely, it feels like the inevitable next step is for these products to begin to collaborate and interact with each other. Google's recently announced Agent to Agent protocol is specifically designed to enable a "multi-agent ecosystem across siloed data systems and applications." Does this result in a very different product and UI experience? Probably. What does it look like? I don't know yet.

AI Product Evolution To Date

It's highly unlikely the pace of change in AI products will slow down anytime soon, so the evolution of AI products I outlined is a timestamp of where we are now. In fact, I put it all into one image for just that reason: to reference the "current" state of things. I'm pretty confident that I'll have to revisit this in the not too distant future...